Logistic regression is a powerful tool for classification and prediction, and it works well on linearly separable problems. This installment recaps its practical implementation, from the traditional maximum-likelihood perspective to the machine-learning approach with neural networks, and from a handheld calculator to GPU cores.

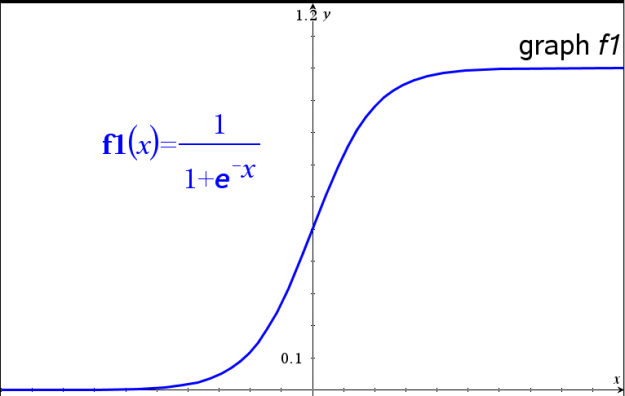

The heart of the logistic regression model is the logistic function. It takes any real value and returns a value between 0 and 1, which makes it ideal for a binary classifier. The function is graphed below.
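The same curve can also be sampled numerically. The snippet below is a minimal sketch in plain Python; the name `logistic` and the sample points are chosen here for illustration and do not come from any of the tools discussed later.

```python
import math

def logistic(z: float) -> float:
    """Map any real z to a value between 0 and 1."""
    # Branch on the sign so math.exp never receives a large positive argument.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

# Sample the S-shaped curve at a few illustrative points.
for z in (-6.0, -1.0, 0.0, 1.0, 6.0):
    print(f"logistic({z:+.1f}) = {logistic(z):.4f}")
```

The output is exactly 0.5 at zero and approaches 0 and 1 at the extremes, which is why a threshold of 0.5 is a natural cut-off for a two-class decision.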

TI Nspire

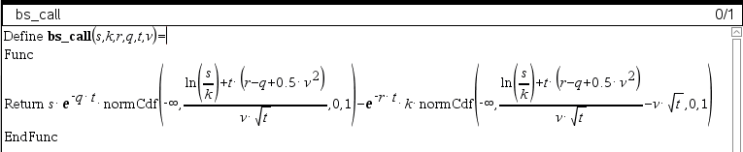

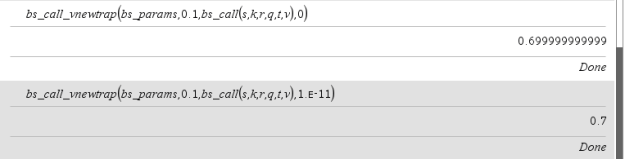

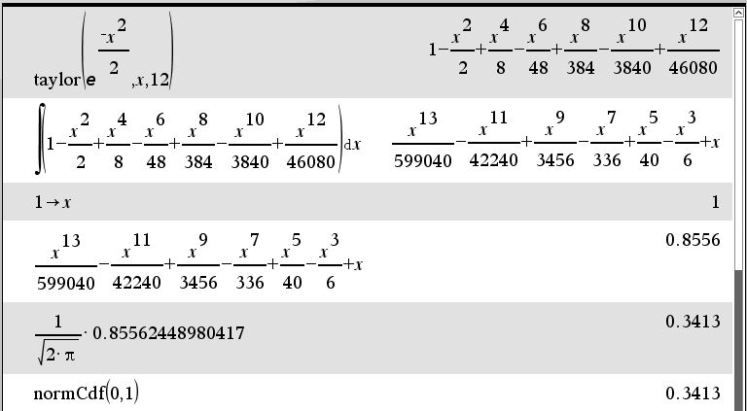

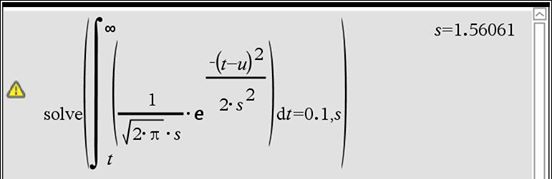

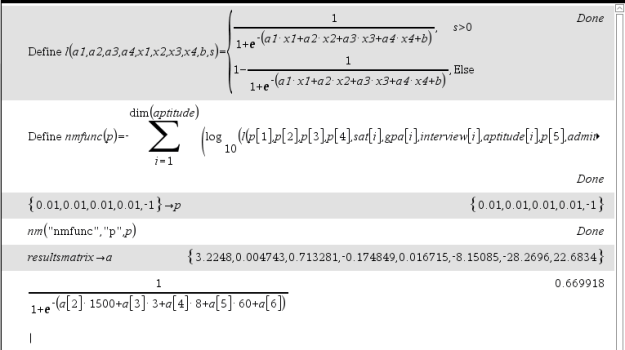

On the TI Nspire calculator, logistic regression is provided as a built-in function, but it is limited to a single independent variable. Problems with several variables require custom programming that applies an optimization technique to determine the coefficients of the regression model. One such application, shown below, uses the Nelder-Mead method on the TI Nspire.

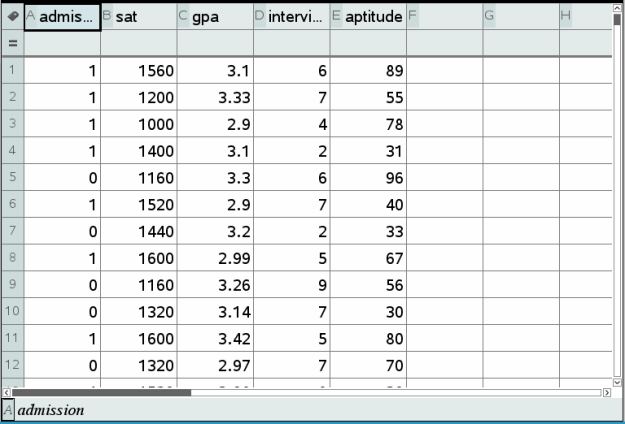

Suppose a data set of university admission records has four attributes as independent variables (SAT score, GPA, interview score, aptitude score) and one outcome (“Admission”) as the dependent variable.

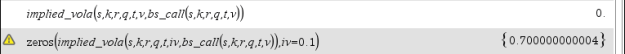

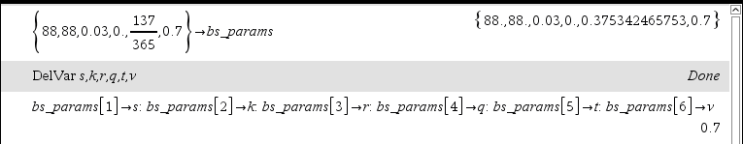

In the Nelder-Mead program, the logistic function is first defined as l. It takes all the regression coefficients (a1, a2, a3, a4, b), the dependent variable (s), and the independent variables (x1, x2, x3, x4), and simply returns the logistic probability. Next, the function to optimize is defined as nmfunc. This is the likelihood function built on the logistic function. Since Nelder-Mead is a minimization algorithm, the negative of this function is taken. When the program run completes, the regression coefficients in the result matrix are available for prediction, as in the following case of a sample record with [SAT=1500, GPA=3, Interview=8, Aptitude=60].
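For readers without the calculator, the two functions can be sketched in plain Python. This is not the TI Nspire program: the names `l` and `nmfunc` are borrowed from the description above, the four records are made-up values for illustration only, and the sketch uses the log of the likelihood (which has the same optimum and is easier on floating-point arithmetic) rather than the likelihood itself.

```python
import math

def l(coeffs, x):
    """Logistic probability of admission for one record x = (x1, x2, x3, x4)."""
    *a, b = coeffs  # a1..a4 are the slopes, b is the intercept
    z = b + sum(ai * xi for ai, xi in zip(a, x))
    z = max(-700.0, min(700.0, z))  # keep math.exp within float range
    return 1.0 / (1.0 + math.exp(-z))

def nmfunc(coeffs, records, outcomes):
    """Negative log-likelihood: the quantity a minimizer would drive down."""
    eps = 1e-12  # guard against log(0)
    total = 0.0
    for x, s in zip(records, outcomes):
        p = min(max(l(coeffs, x), eps), 1.0 - eps)
        total += s * math.log(p) + (1 - s) * math.log(1.0 - p)
    return -total

# Hypothetical records: (SAT, GPA, interview, aptitude) and admission outcome.
records = [(1200, 2.8, 5, 40), (1450, 3.6, 8, 70),
           (1000, 2.5, 4, 35), (1500, 3.9, 9, 80)]
outcomes = [0, 1, 0, 1]

zero = [0.0, 0.0, 0.0, 0.0, 0.0]      # every record gets probability 0.5
guess = [0.0, 0.0, 1.0, 0.0, -6.5]    # hand-picked: lean on the interview score

print("objective at zero coefficients:", nmfunc(zero, records, outcomes))
print("objective at hand-picked guess:", nmfunc(guess, records, outcomes))
```

A lower value means the coefficients explain the outcomes better. If SciPy is available, a function of this shape can be handed to `scipy.optimize.minimize(nmfunc, zero, args=(records, outcomes), method="Nelder-Mead")`. The toy records here are perfectly separable, so they only illustrate how the objective is evaluated; a real fit needs a larger data set with overlapping classes.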

R

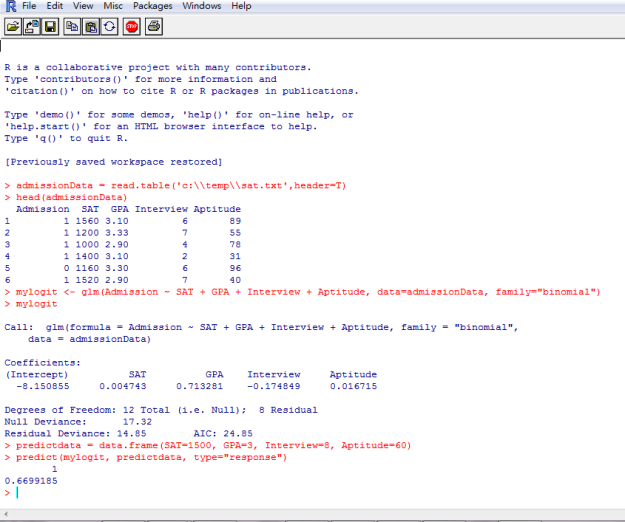

R is a sophisticated statistical package, so the calculation is much simpler. For the sample case above, it takes just a few lines of commands to invoke the built-in logistic model.

Theano

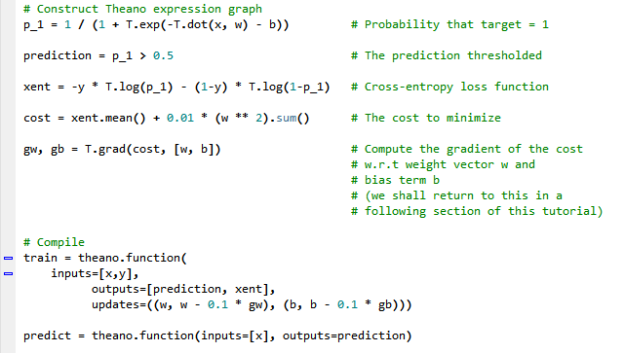

Apart from the traditional methods, modern advances in computing have made it possible to pair neural networks with specialized hardware such as GPUs and solve these problems much more efficiently, especially on large volumes of data. The Python library Theano is a complex library that supports these calculations through optimization and symbolic expression evaluation. It can also compile for CUDA and brings features of a computer algebra system to Python.

One of the examples that comes with the Theano documentation applies logistic regression to showcase various Theano features. It first generates a random data set as the sample input and outcome using numpy.random. The regression model is then created by defining the expressions the logistic model requires, including the logistic function and the likelihood function. Lastly, the theano.function method compiles the symbolic expression graph of the regression model into callable objects for training the neural network and for subsequent prediction.
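The steps above (random data, logistic expression, cost, training, prediction) can be mirrored in plain Python to show what the compiled functions compute. This is a sketch, not the Theano example: Theano builds the gradient symbolically and compiles it, whereas here the gradient of the cross-entropy cost is written out by hand. The data size, the labelling rule, the step count and the learning rate are all assumptions chosen for illustration.

```python
import math
import random

def sigmoid(z):
    z = max(-700.0, min(700.0, z))  # keep math.exp within float range
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    """Logistic probability that record x belongs to class 1."""
    return sigmoid(b + sum(wi * xi for wi, xi in zip(w, x)))

def mean_cross_entropy(w, b, X, y):
    """Average negative log-likelihood over the data set."""
    eps = 1e-12  # guard against log(0)
    total = 0.0
    for xi, yi in zip(X, y):
        p = min(max(predict_proba(w, b, xi), eps), 1.0 - eps)
        total -= yi * math.log(p) + (1 - yi) * math.log(1.0 - p)
    return total / len(X)

def train(X, y, steps=200, lr=0.1):
    """Plain batch gradient descent on the mean cross-entropy."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(steps):
        gw, gb = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = predict_proba(w, b, xi) - yi  # derivative of the cost w.r.t. z
            for j in range(d):
                gw[j] += err * xi[j] / n
            gb += err / n
        w = [wj - lr * gj for wj, gj in zip(w, gw)]
        b -= lr * gb
    return w, b

# Hypothetical data: 200 random records with 3 features each.
rng = random.Random(0)
X = [[rng.gauss(0, 1) for _ in range(3)] for _ in range(200)]
y = [1 if x[0] - x[1] > 0 else 0 for x in X]  # made-up labelling rule

w, b = train(X, y)
predictions = [1 if predict_proba(w, b, x) > 0.5 else 0 for x in X]
accuracy = sum(p == t for p, t in zip(predictions, y)) / len(y)
print("cost before:", mean_cross_entropy([0.0] * 3, 0.0, X, y))
print("cost after: ", mean_cross_entropy(w, b, X, y))
print("training accuracy:", accuracy)
```

Unlike the documentation example, the labels here follow a simple rule instead of being random, so that the drop in cost and the resulting accuracy are meaningful to look at.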

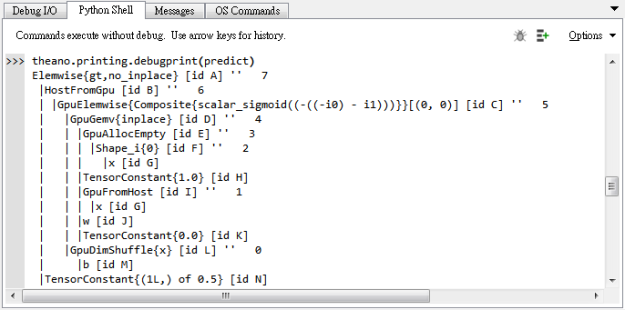

A nice feature of Theano is the pretty printing of the expression model in a tree-like text format. It feels like home, reminding me of my days reading SQL query plans to tune database queries.